Model registry & deployment

Deploy and manage models in production

Streamline your ML workflows with MLflow's comprehensive model registry for version control, approvals, and deployment management.

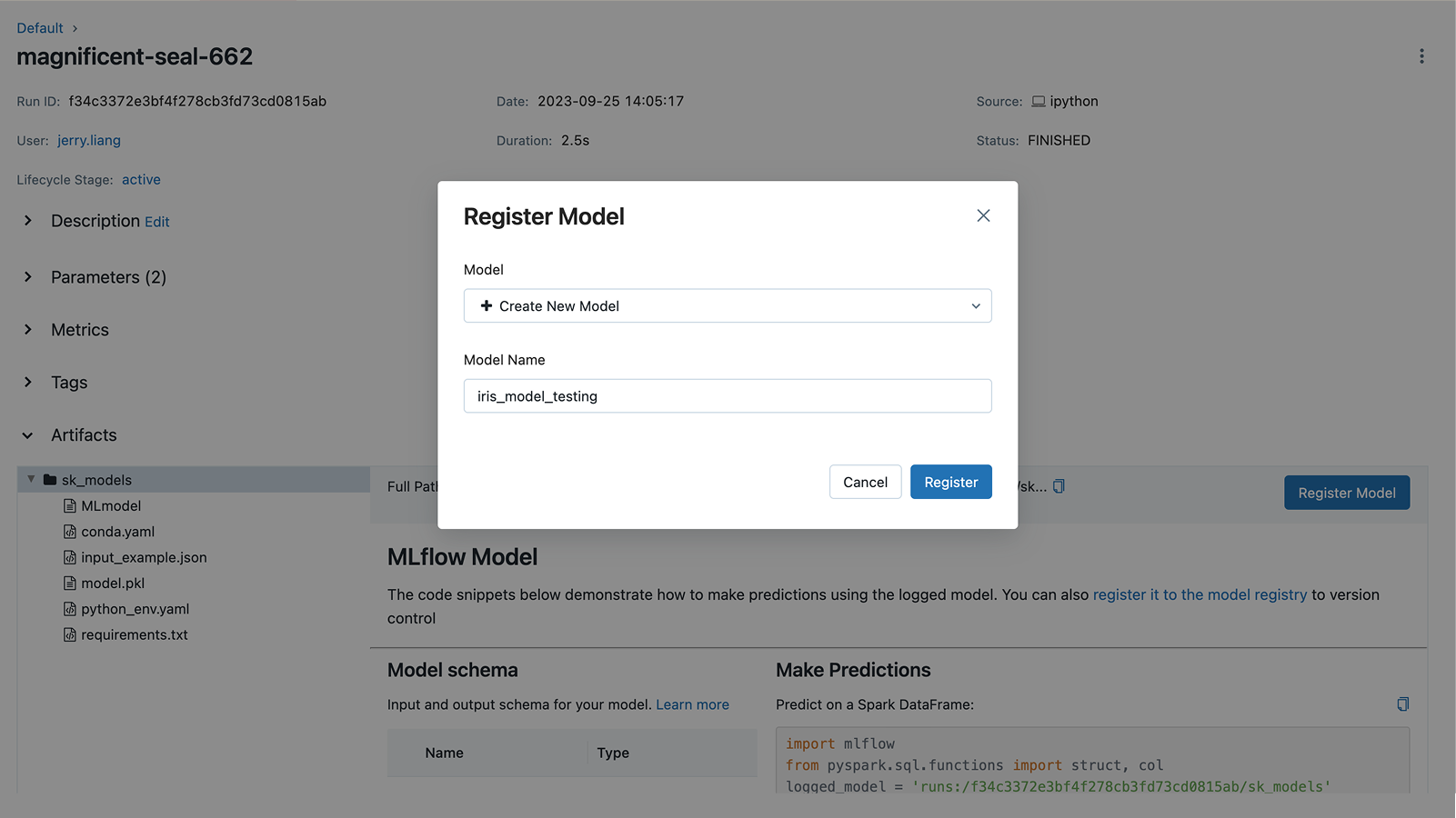

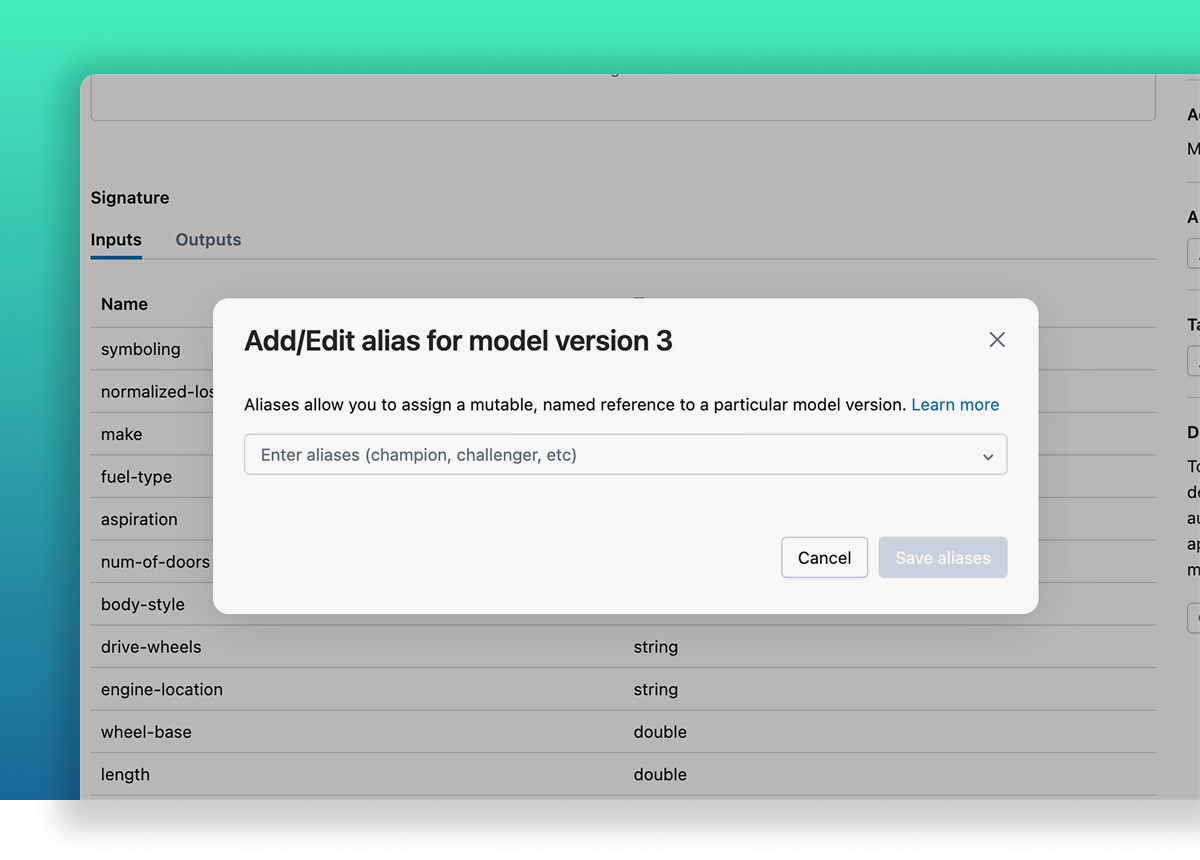

Model registry

Stage-based model lifecycle management

Move model versions through lifecycle stages such as Development, Staging, and Production, or label them with any alias you choose, using built-in approval workflows and automated notifications. Maintain a complete audit trail of model transitions, including who approved each change and when it occurred.
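In MLflow itself, a registered version is labeled with `MlflowClient.set_registered_model_alias(name, alias, version)` and loaded through a `models:/<name>@<alias>` URI. The sketch below is a hypothetical, stdlib-only model of the same idea, not MLflow's implementation: `ToyRegistry`, its methods, and the placeholder artifact locations are invented for illustration. It shows why alias-based promotion is convenient (consumers ask for an alias, not a version number) and how each move can be appended to an audit log recording who acted and when.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ToyRegistry:
    """Hypothetical in-memory registry (not MLflow's implementation).

    Tracks model versions, movable aliases, and an append-only audit log.
    """
    versions: dict = field(default_factory=dict)   # version number -> artifact location
    aliases: dict = field(default_factory=dict)    # alias name -> version number
    audit_log: list = field(default_factory=list)  # (UTC timestamp, actor, action)

    def register(self, location: str, actor: str) -> int:
        """Add a new version; numbering starts at 1 and increases monotonically."""
        version = len(self.versions) + 1
        self.versions[version] = location
        self._record(actor, f"registered version {version}")
        return version

    def set_alias(self, alias: str, version: int, actor: str) -> None:
        """Point an alias at an existing version, logging who moved it and from where."""
        if version not in self.versions:
            raise KeyError(f"unknown version {version}")
        previous = self.aliases.get(alias)
        self.aliases[alias] = version
        self._record(actor, f"alias '{alias}': {previous} -> {version}")

    def resolve(self, alias: str) -> str:
        """Return the artifact location the alias currently points to."""
        return self.versions[self.aliases[alias]]

    def _record(self, actor: str, action: str) -> None:
        self.audit_log.append((datetime.now(timezone.utc).isoformat(), actor, action))


registry = ToyRegistry()
v1 = registry.register("placeholder/model-v1", actor="alice")
v2 = registry.register("placeholder/model-v2", actor="alice")
registry.set_alias("production", v1, actor="bob")
registry.set_alias("production", v2, actor="bob")  # promote v2; callers still ask for "production"
print(registry.resolve("production"))  # placeholder/model-v2
for timestamp, actor, action in registry.audit_log:
    print(timestamp, actor, action)
```

Because consumers resolve the alias, promotion or rollback is a single alias move, and every move lands in the log.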

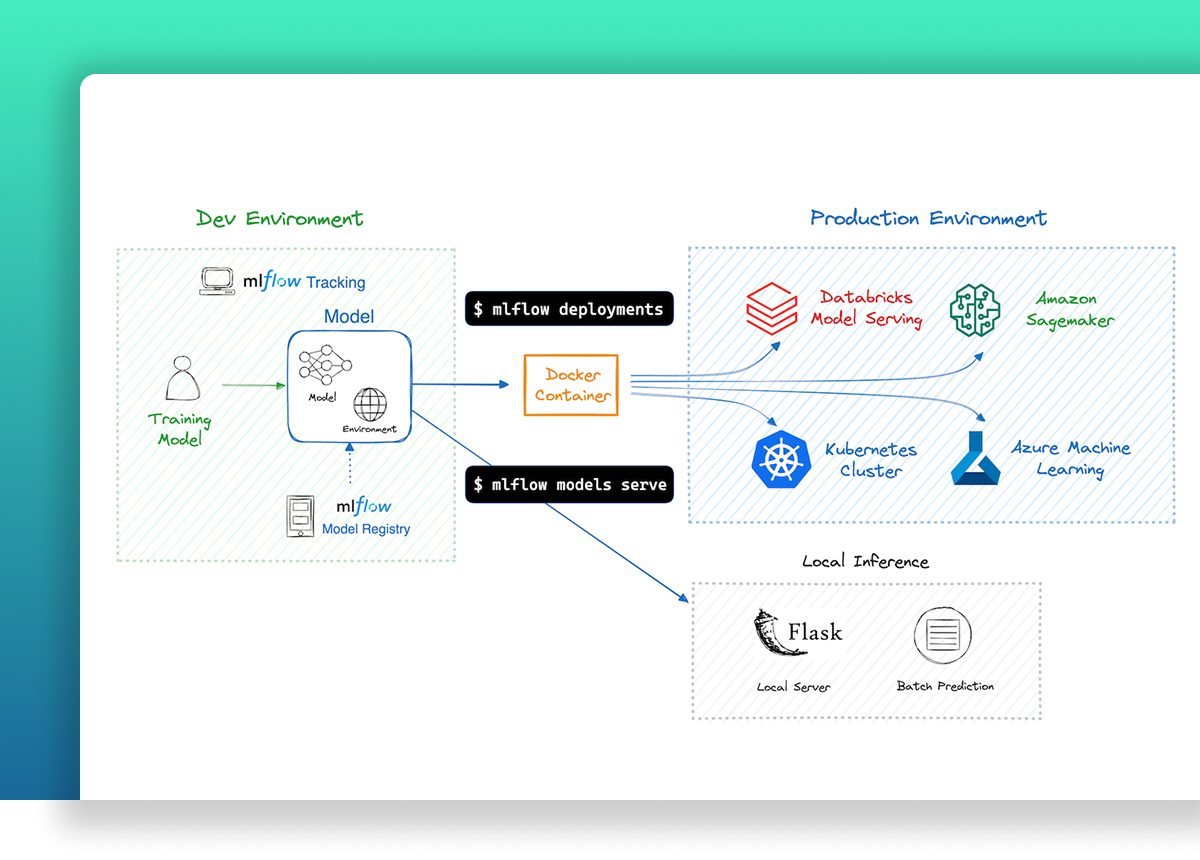

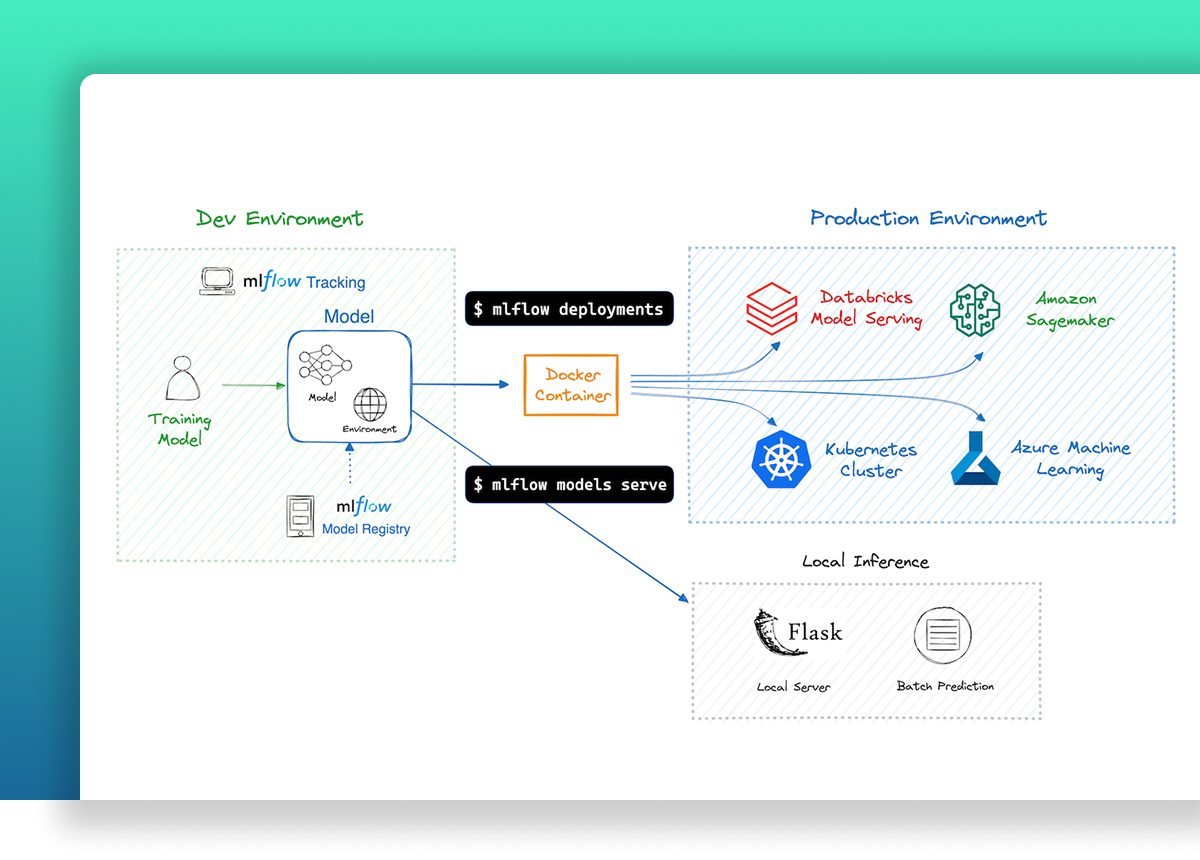

Model deployment flexibility

Deploy models as Docker containers, Python functions, or REST endpoints, or directly to a range of serving platforms. Because every target loads the same packaged model, behavior stays consistent from local testing through cloud-based serving, which simplifies the move from development to production.
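As one example of a REST target, a model served locally (for instance with `mlflow models serve -m <model_uri> -p 5000`) accepts JSON POSTed to `/invocations`, and `dataframe_split` is one of the accepted input formats. The stdlib-only sketch below builds such a request; the column names, rows, host, and port are placeholders, and `score` assumes a server is already running and returns a JSON body with a `predictions` field.

```python
import json
import urllib.request


def build_invocation_request(columns, rows, url="http://127.0.0.1:5000/invocations"):
    """Build (but do not send) a scoring request in the dataframe_split format."""
    payload = {"dataframe_split": {"columns": list(columns), "data": [list(r) for r in rows]}}
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def score(request, timeout=10.0):
    """Send the request to a running server and return the 'predictions' field."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["predictions"]


# Placeholder feature names and values; only builds the request, no network call.
req = build_invocation_request(["x1", "x2"], [[1.0, 2.0], [3.0, 4.0]])
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Keeping request construction separate from sending makes the payload easy to inspect or reuse against a different host, such as a containerized deployment of the same model.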

Model serving

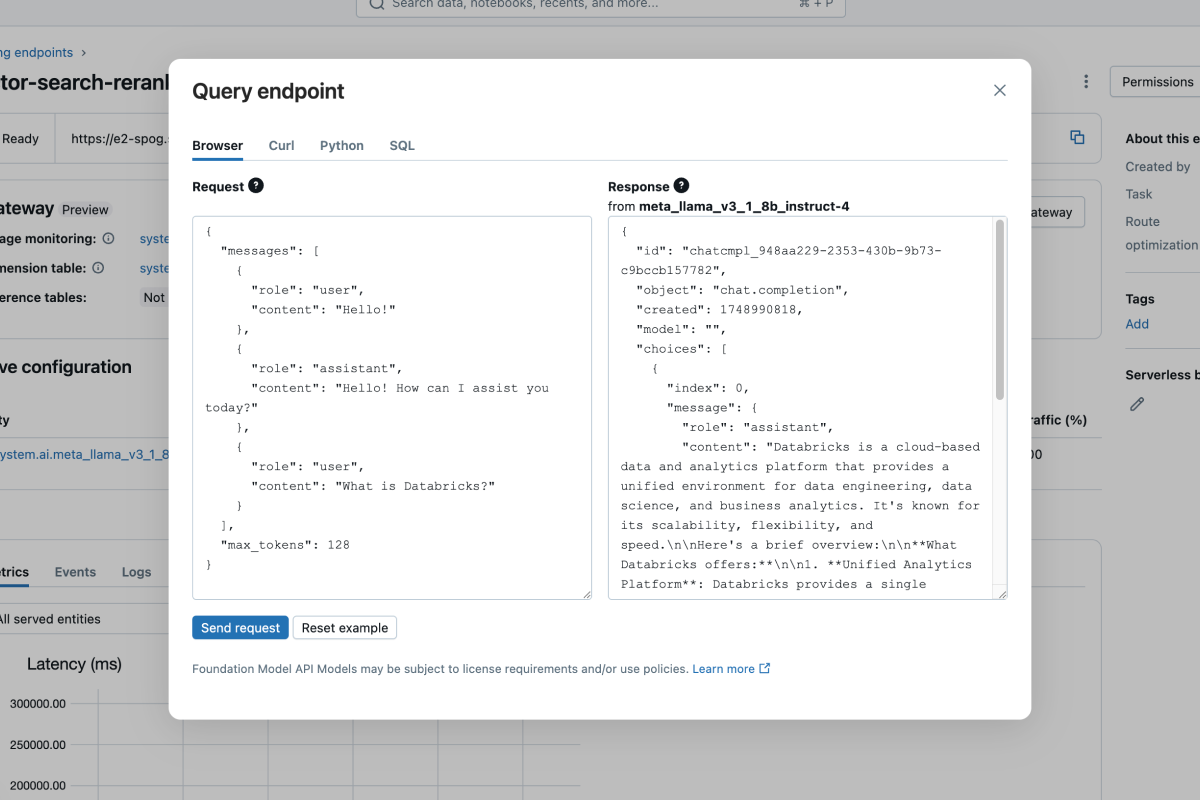

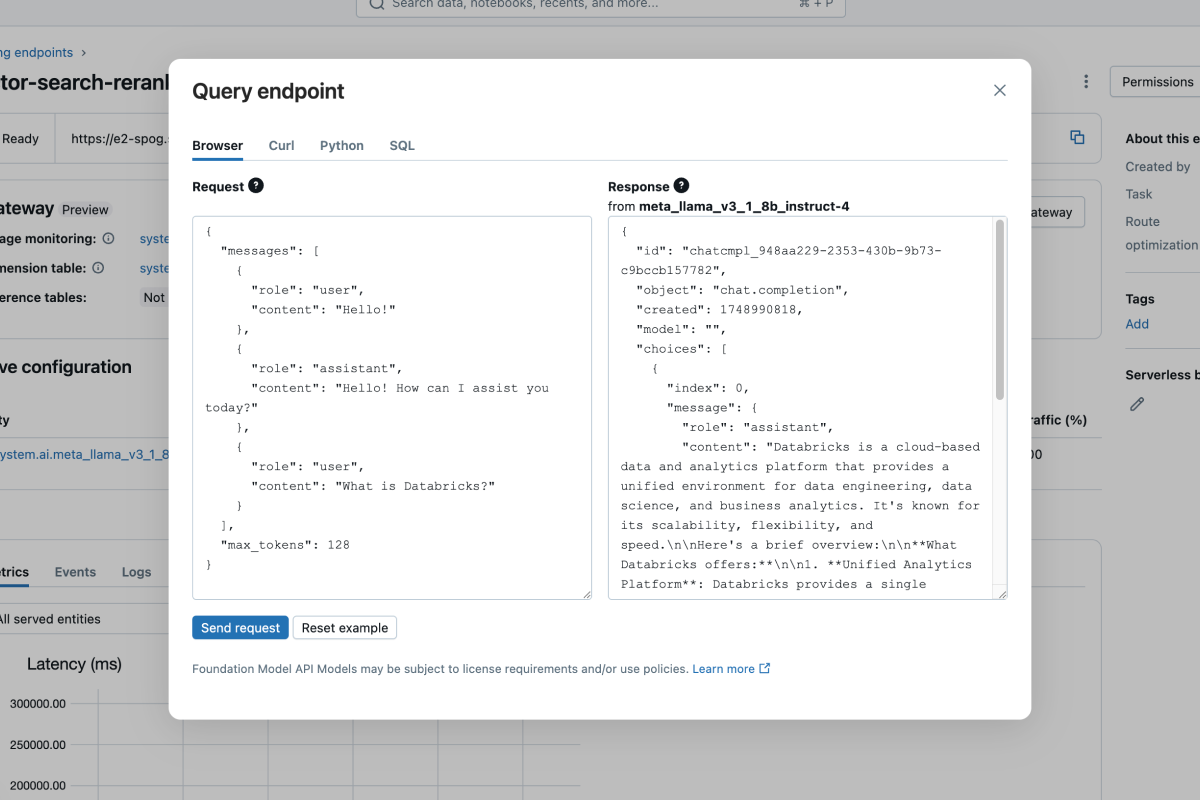

Scalable real-time serving

Databricks Model Serving provides a unified, scalable interface for deploying models as REST APIs. With managed deployment on Databricks, endpoints scale up or down automatically as traffic changes, balancing performance against infrastructure cost without manual intervention.
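Databricks does not describe its scaling algorithm here, so the following is only a generic target-tracking rule, sketched under assumed numbers, to illustrate what "scaling with traffic" computes: provision enough replicas for the observed request rate, within configured bounds. `desired_replicas`, the per-replica capacity of 50 requests/second, and the bounds are all hypothetical.

```python
import math


def desired_replicas(requests_per_sec: float, capacity_per_replica: float,
                     min_replicas: int = 0, max_replicas: int = 10) -> int:
    """Hypothetical target-tracking rule (not Databricks' algorithm).

    Provision just enough replicas for the observed load, clamped to
    [min_replicas, max_replicas]; min_replicas=0 lets an idle endpoint scale to zero.
    """
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    needed = math.ceil(requests_per_sec / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Assumed capacity: each replica sustains 50 requests/second.
for load in (0, 45, 120, 5000):
    print(f"{load:>5} req/s -> {desired_replicas(load, capacity_per_replica=50)} replicas")
```

Rounding up favors latency over cost, and the upper bound caps spend during traffic spikes; a production scaler would typically also smooth the load signal to avoid flapping.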

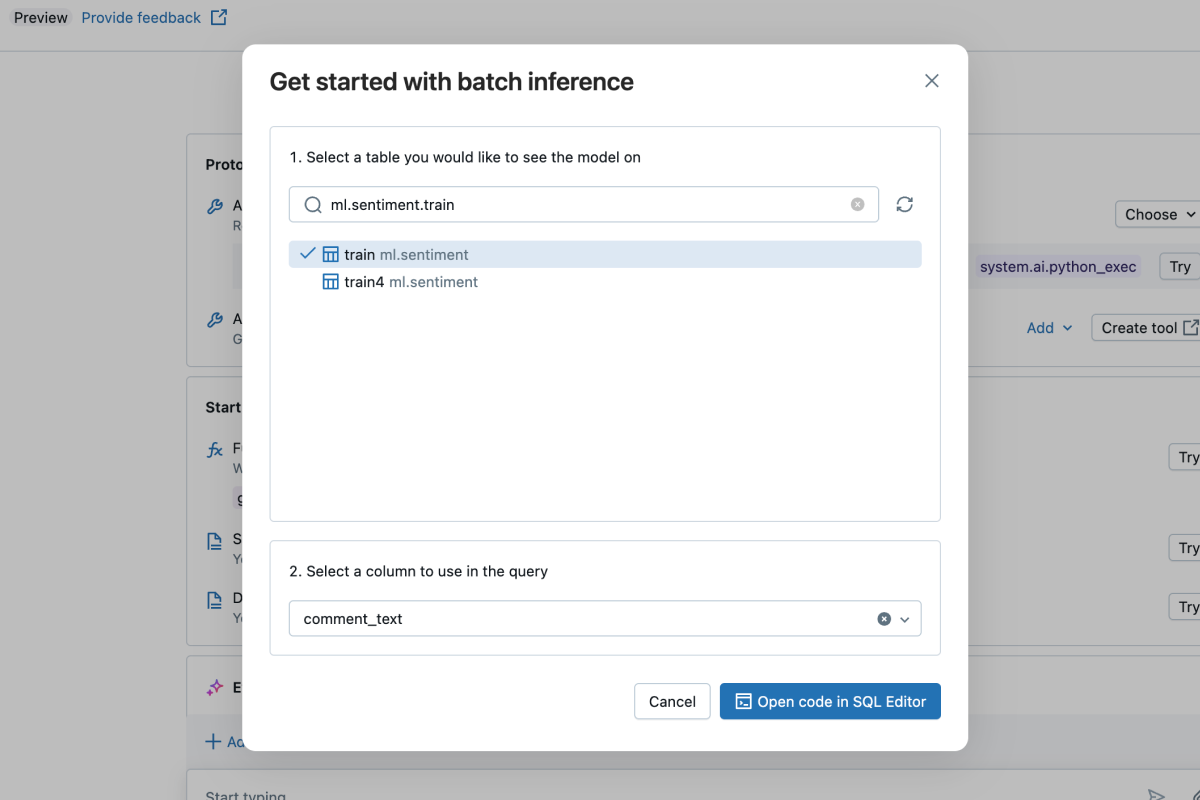

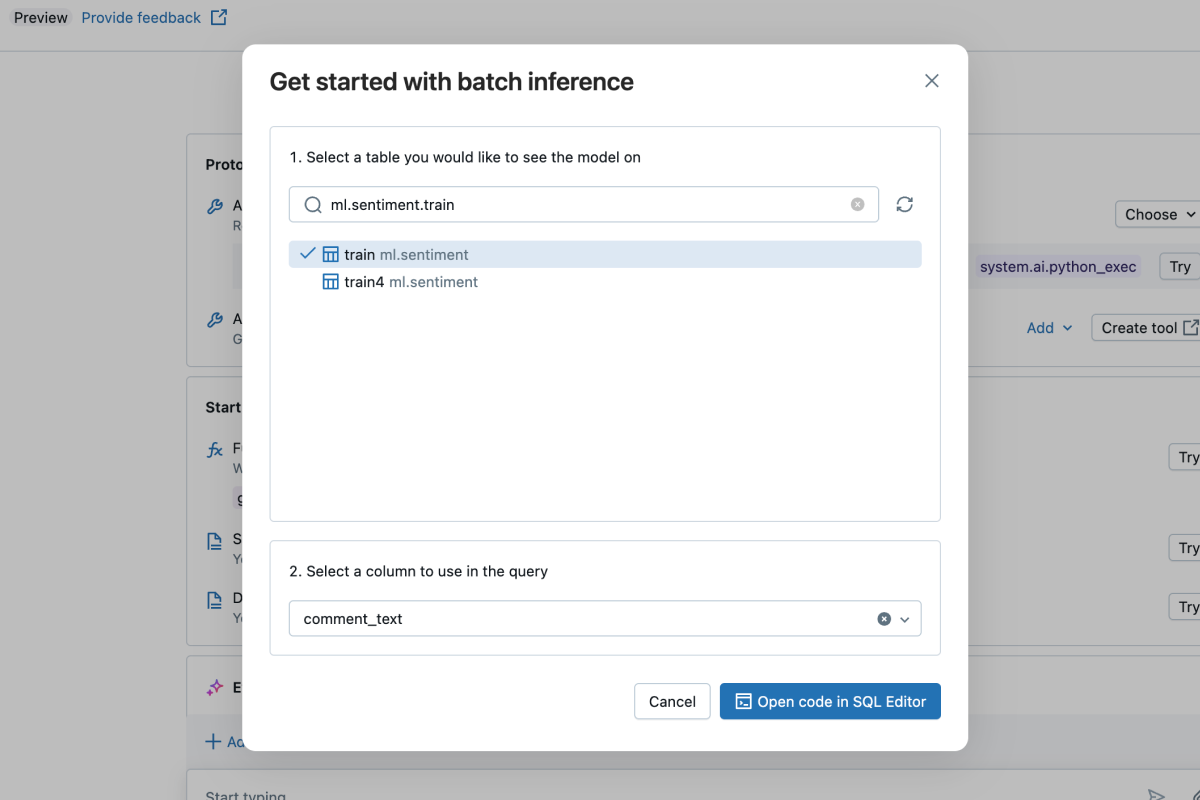

High-performance batch inference

Deploy production models for batch inference directly on Apache Spark to process billions of predictions efficiently over massive datasets.
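On Spark, MLflow's entry point for this is `mlflow.pyfunc.spark_udf(spark, model_uri)`, which wraps a logged model as a UDF that Spark applies to DataFrame columns in batches across the cluster. The stdlib sketch below only illustrates the underlying pattern on a single machine: stream rows through a vectorized predict function in fixed-size chunks so memory stays bounded. `toy_model`, its formula, and the batch size are hypothetical.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List, Sequence

Row = Sequence[float]


def chunked(rows: Iterable[Row], size: int) -> Iterator[List[Row]]:
    """Yield successive lists of at most `size` rows without loading everything."""
    it = iter(rows)
    while chunk := list(islice(it, size)):
        yield chunk


def batch_predict(predict: Callable[[List[Row]], List[float]],
                  rows: Iterable[Row], batch_size: int = 1000) -> Iterator[float]:
    """Stream rows through a vectorized predict function one chunk at a time."""
    for chunk in chunked(rows, batch_size):
        yield from predict(chunk)


def toy_model(chunk: List[Row]) -> List[float]:
    """Hypothetical stand-in for a loaded model's batch predict."""
    return [x1 + 2 * x2 for x1, x2 in chunk]


rows = ((i, i + 1) for i in range(5))
print(list(batch_predict(toy_model, rows, batch_size=2)))  # [2, 5, 8, 11, 14]
```

Spark applies the same idea per partition and in parallel, which is what lets the approach scale to very large datasets.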
